Self-hosting with Caddy, Gitea, Hugo, Bitwarden, and more!

I have always wanted to try self-hosting things that are clearly better done by a SaaS provider. That’s why I took a few hours, a big ol' Ubuntu VPS, and a domain name to try and self-host a bunch of things I use every day! I might hate myself later, but I’m having fun for now. I decided to write a little bit about what I did to make everything work.

UFW (Uncomplicated Firewall)

If I had gone with a more fully featured cloud hosting provider, such as DigitalOcean or Linode (not affiliated with either), I would have been able to configure my VPS’s firewall through a UI console. However, I had already bought a really large server more cheaply from another provider, which meant I needed to set up the firewall on the server myself. As a software developer, I am horrible at sysadmin work by nature; the idea of writing critical iptables rules shook me to my very core. That’s why I chose to configure my firewall with the easier-to-use ufw.

The setup I needed was as follows: deny all incoming traffic by default, allow all outgoing by default, then allow traffic on the ports I needed (namely SSH, HTTP, and HTTPS).

What scared me the most was potentially locking myself out of my server. Working directly with iptables put me at risk of this, because iptables rules take effect in the kernel the moment you add them; if I changed one wrong thing, I could completely lock myself out. ufw is a front end that writes those iptables rules for me, and while it is disabled I can add rules without any of them taking effect. That let me configure everything first and only turn the firewall on with ufw enable once I felt it was ready. I still did a dry run on a temporary tiny VPS to make sure I wouldn’t lock myself out (sudo removed for brevity):

```bash
ufw default deny incoming
ufw default allow outgoing
ufw allow ssh
ufw allow http
ufw allow https
ufw allow 25565 # I have a Minecraft server running on this machine already!
ufw enable
```

After doing this, I disconnected and tried to ssh into the server again. It worked as expected, and now that I’d verified it worked on the test VPS, I ran them on my main VPS with similar success.
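If you’d rather script these steps than type them one at a time, they can be wrapped in a small Bash function. This is a sketch, not what I actually ran: the function name `apply_firewall` and passing extra ports as arguments are my own additions. It allows SSH before anything else, and it uses `ufw --force enable` because plain `ufw enable` stops to ask for confirmation, which doesn’t work in a non-interactive script.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): apply the firewall rules from this post in one go.
# Run as root. Extra ports are passed as arguments, e.g. 25565 for Minecraft.
apply_firewall() {
  # Allow SSH first so the rule exists before the firewall is switched on.
  # "ssh" maps to port 22/tcp; use the real port if sshd listens elsewhere.
  ufw allow ssh || return 1
  ufw default deny incoming || return 1
  ufw default allow outgoing || return 1
  ufw allow http || return 1
  ufw allow https || return 1
  local port
  for port in "$@"; do
    ufw allow "$port" || return 1
  done
  # --force skips the interactive "may disrupt existing ssh connections" prompt.
  ufw --force enable || return 1
  # Print the active rule set so you can eyeball it before logging out.
  ufw status verbose
}
```

Usage, from a root shell after sourcing the file: `apply_firewall 25565`. Keep your current SSH session open and test a second login before closing it.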

Time to come clean, though: this wasn’t the first thing I did. It was actually one of the last (hence why I was extra scared of locking myself out). The main reason I did it was to make sure my server wouldn’t accept connections to http://<ip>:<port>. This worked for some of my services, but not for one of them. It didn’t work because, by default, ufw can’t stop Docker from accepting connections on published ports: when Docker is installed, it adds its own iptables rules that are evaluated before ufw’s. I tried a veritable cornucopia of bad solutions before finding this repo, which provided the perfect solution for me.
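Before fixing anything, it helps to see which containers are actually exposed. The sketch below is a diagnostic only, not the fix from the repo mentioned above; the function name is hypothetical. It lists containers whose published ports listen on all interfaces (`0.0.0.0` or `::`), which are the ones that slip past ufw. Another common approach is to publish ports as `127.0.0.1:<host port>:<container port>`, so only local processes such as Caddy can reach them.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print "name|ports" for every running container
# that publishes a port on all interfaces, bypassing ufw.
public_docker_ports() {
  local name ports
  # "|" is used as a separator because it can't appear in container names.
  while IFS='|' read -r name ports; do
    # Docker prints bindings like "0.0.0.0:8080->80/tcp, :::8080->80/tcp";
    # newer versions may write the IPv6 wildcard as "[::]:8080->80/tcp".
    if [[ "$ports" == *"0.0.0.0:"* || "$ports" == *":::"* || "$ports" == *"[::]:"* ]]; then
      printf '%s|%s\n' "$name" "$ports"
    fi
  done < <(docker ps --format '{{.Names}}|{{.Ports}}')
}
```

A container published as `127.0.0.1:8081->80/tcp` won’t show up in the output, because it only listens on the loopback interface.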

With my server locked down (let’s pretend that’s the first thing I did, like it should have been) it was time to move on to the server I decided to use for reverse proxying.

Caddy

In my previous attempts at hosting things myself, I fumbled through nginx reverse-proxy tutorials. nginx is a great skill to learn and an incredibly mature tool, but I decided to take a different route this time and use Caddy. After using it on this server, I am officially sold on Caddy.

The two reasons I love Caddy are its super easy configuration and its automatic HTTPS. My biggest challenge with self-hosting in the past was partly my poor nginx configuration skills, but mostly that messing with certbot (an admittedly great and easy-to-use project) was more work than I wanted to manage for every single project I hosted. HTTPS is a process that can be automated, and Caddy proves it. Now I simply add a new site block to my Caddyfile and that site automatically gets HTTPS (provided the domain name I specified has DNS configured correctly; more on that later).

I installed Caddy from its stable apt repo, ran it as a systemd service, and put my configuration in /etc/caddy/Caddyfile. When I mention “adding something to the Caddyfile” further down this article, I mean editing this file and running sudo systemctl restart caddy to reload the configuration.
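A restart works, but a typo in the Caddyfile takes every site down with it. As an alternative, here is a sketch that checks the file first and then asks systemd to reload Caddy, which the packaged Caddy service supports and which applies the new config without a full restart. The function name and the `CADDYFILE` variable are hypothetical; `caddy validate` and `systemctl reload` are the real commands.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): validate the Caddyfile, then reload Caddy.
# Run as root. Override CADDYFILE if your config lives elsewhere.
CADDYFILE=${CADDYFILE:-/etc/caddy/Caddyfile}

apply_caddyfile() {
  # Refuse to touch the running server if the config doesn't parse.
  caddy validate --config "$CADDYFILE" --adapter caddyfile || return 1
  # Graceful reload via the unit's ExecReload instead of a full restart.
  systemctl reload caddy
}
```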

Gitea

The first thing I wanted to set up was my own git server! I used a fantastic open-source project called Gitea that replicates a lot of GitHub’s features. It’s missing some of the more advanced GitHub features, but as a place to toss my personal project code it seemed perfect.

I installed Gitea through a user-maintained deb package. Honestly, if I were starting from the top, I probably would just install it through Docker, but this is working fine for now anyway. Installing this package created a gitea user for me, so I created the necessary directories from this Gitea tutorial and gave the gitea user access instead of the git user that these docs suggest manually creating. The rest of the steps from then on in the docs ended up working for me. So now I was able to run the gitea systemd service on port 3000. The next step was to set up the reverse proxy so I could get into my Gitea instance.
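For reference, the directory setup from Gitea’s binary-install docs looks roughly like the sketch below, with the docs’ `git` user swapped for the `gitea` user the package created. This is a sketch, not a copy of what I ran; it assumes the package also created a `gitea` group, and the function name is hypothetical. Check the current Gitea docs before relying on the exact permissions.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): create Gitea's working directories for the
# "gitea" user instead of the docs' "git" user. Run as root.
# Assumes a group with the same name as the user exists.
GITEA_USER=${GITEA_USER:-gitea}

prepare_gitea_dirs() {
  mkdir -p /var/lib/gitea/{custom,data,log} || return 1
  chown -R "$GITEA_USER:$GITEA_USER" /var/lib/gitea || return 1
  chmod -R 750 /var/lib/gitea || return 1
  # Gitea writes app.ini here during the web installer, so the group needs
  # write access at first; the docs suggest tightening this afterwards.
  mkdir -p /etc/gitea || return 1
  chown "root:$GITEA_USER" /etc/gitea || return 1
  chmod 770 /etc/gitea
}
```

After that, `systemctl enable --now gitea` starts the service and keeps it enabled across reboots.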

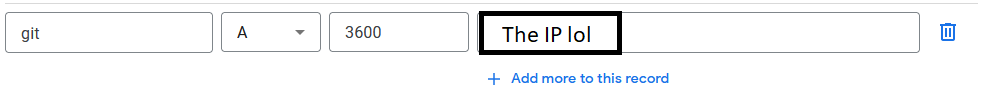

I have a domain (ragecage64.com) with Google Domains; however, I don’t use anything specific to that registrar. All I had to do was add a DNS address (A) record for the git subdomain I wanted. It looked something like this:

Once this was set up, I added the following block to my Caddyfile:

```
git.ragecage64.com {
    reverse_proxy localhost:<gitea port>
}
```

After restarting Caddy, I had https://git.ragecage64.com ready to go! There was a first-time setup screen that I forgot to take a screenshot of, but it’s pretty self-explanatory. I spent a little time selectively migrating the repos I wanted to keep to my new Gitea instance and setting up my SSH key and new username. Really loving Gitea so far!
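Since every reverse-proxied service gets the same three-line block, a tiny helper can print it for you. This is a hypothetical convenience function, not part of Caddy; it only generates text in the same shape as the block above.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print a reverse-proxy site block for a
# subdomain whose service listens on a local port.
# Usage: proxy_block git.ragecage64.com 3000
proxy_block() {
  local domain=$1 port=$2
  # Reject anything that isn't a plain port number.
  if [[ ! "$port" =~ ^[0-9]+$ ]]; then
    echo "port must be a number, got '$port'" >&2
    return 1
  fi
  printf '%s {\n\treverse_proxy localhost:%s\n}\n' "$domain" "$port"
}
```

For example, `proxy_block git.ragecage64.com 3000 | sudo tee -a /etc/caddy/Caddyfile` appends the block, and a Caddy restart or reload picks it up.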

Get files from Gitea repos within the server

This was an important step for the next couple of things I’m going to talk about. Once code is pushed to my Gitea instance, I can access those files on the server to run whatever builds I need.

To do this, figure out where your Gitea instance stores its repos (for me it was the default directory, /var/lib/gitea/data/gitea-repositories/<gitea username>). This folder contains the bare repositories. To get the files out of one of them from anywhere on your server, clone it, i.e. git clone $GITEA_REPO_DIR/<your repo>.git, where $GITEA_REPO_DIR is the folder above. Now you have a copy of the code on your server to do with whatever you please.
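Since you’ll do this repeatedly, a small helper can clone the first time and just pull on later runs. This is a sketch with a hypothetical function name; it expects `GITEA_REPO_DIR` to be set to your own `.../gitea-repositories/<gitea username>` folder rather than guessing it.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): clone a repo out of Gitea's bare-repo folder,
# or update the existing clone. Usage: sync_repo <repo name> <destination dir>
# Expects GITEA_REPO_DIR to point at .../gitea-repositories/<gitea username>.
sync_repo() {
  local repo=$1 dest=$2
  if [[ -z "${GITEA_REPO_DIR:-}" ]]; then
    echo "GITEA_REPO_DIR is not set" >&2
    return 1
  fi
  if [[ -d "$dest/.git" ]]; then
    # Existing clone: fast-forward to whatever was last pushed to Gitea.
    git -C "$dest" pull --ff-only
  else
    git clone "$GITEA_REPO_DIR/$repo.git" "$dest"
  fi
}
```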

Bub the Discord Bot

This was probably the easiest thing to set up. My bot is written in Go, so all I need is a Discord bot token in my local environment and the compiled program. First, I pushed the bot code to my Gitea instance. Then I cloned the bare repo on my server, ran the build command from the Makefile, and ran the compiled binary. Pretty simple setup!
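Those steps can be captured in two small functions. Treat this as a sketch: the checkout location, the binary name `bub`, and the `DISCORD_TOKEN` variable name are all hypothetical, and `make` is assumed to build the binary with the Makefile’s default target.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers) for updating and running the bot.
# BUB_DIR, the "bub" binary name, and DISCORD_TOKEN are placeholders.
BUB_DIR=${BUB_DIR:-$HOME/bub}

build_bub() {
  git -C "$BUB_DIR" pull --ff-only || return 1
  # Assumes the Makefile's default target compiles the Go binary.
  make -C "$BUB_DIR" || return 1
}

run_bub() {
  # Fail early instead of letting the bot start without credentials.
  if [[ -z "${DISCORD_TOKEN:-}" ]]; then
    echo "DISCORD_TOKEN is not set" >&2
    return 1
  fi
  "$BUB_DIR/bub"
}
```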

The challenge came when I needed to run multiple apps at once on my server. The more correct thing would probably be to create systemd services out of everything, but that’s hard. :)
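For comparison, a systemd unit for one of these programs is shorter than it sounds. The sketch below prints a minimal unit file; the function name, the `User=` value (it assumes a system user with the same name as the service), and the `/etc/<name>.env` path are assumptions to adjust.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print a minimal systemd unit for a
# long-running program. Usage:
#   make_unit <name> <working dir> <absolute command...> > /etc/systemd/system/<name>.service
# Assumes a system user named <name> exists; arguments containing spaces
# are not re-quoted in ExecStart.
make_unit() {
  local name=$1 workdir=$2
  shift 2
  cat <<EOF
[Unit]
Description=$name
After=network-online.target
Wants=network-online.target

[Service]
WorkingDirectory=$workdir
ExecStart=$*
# Optional KEY=value file (e.g. a bot token); the leading "-" ignores it if missing.
EnvironmentFile=-/etc/$name.env
Restart=on-failure
User=$name

[Install]
WantedBy=multi-user.target
EOF
}
```

After writing the file, `systemctl daemon-reload` followed by `systemctl enable --now <name>` starts it, and `journalctl -u <name> -f` follows its logs.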

The two things I needed to keep running, and whose logs I needed to check quickly, were my Minecraft server and Bub. I used multiple GNU screen sessions to accomplish this, starting named sessions like so:

```bash
screen -S minecraft
```

Once I created the screen session, I ran the server and detached from the session with Ctrl+A, D.

Then when I needed to reattach to the screen, I could use the command:

```bash
screen -xS minecraft
```

I did the same thing with my bot. Pretty good setup overall!
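If you restart these sessions often, screen can also create them already detached with `-dmS`, which skips the attach-then-Ctrl+A, D dance. Here is a sketch of a helper that does that, and does nothing if a session with that name is already running. The function name and the example Minecraft command are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): start a named, detached screen session
# unless one already exists. Usage: ensure_screen minecraft java -jar server.jar
ensure_screen() {
  local name=$1
  shift
  local sessions
  # `screen -ls` may exit non-zero even when sessions exist, so only its output is used.
  sessions=$(screen -ls 2>/dev/null || true)
  # Session lines look like "<tab>12345.minecraft<tab>(Detached)".
  if [[ "$sessions" == *".$name"[[:space:]]* ]]; then
    echo "screen session '$name' is already running"
    return 0
  fi
  # -d -m: start detached; -S: name the session for reattaching later.
  screen -dmS "$name" "$@"
}
```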

This Blog

I am now also hosting this blog on my server! It’s a static site made with Hugo, which I highly recommend if you’re looking to make a blog. The great thing is that a static site is just as easy to serve with Caddy!

I started by installing Hugo, cloning the bare repo (with --recurse-submodules, because the theme I installed lives in a Git submodule; important step!), and running hugo. This built my site into the public folder (optionally, you could output it to a more sensible place on the server). Next, I added the following block to my Caddyfile:

```
blog.ragecage64.com {
    root * <full path to site folder>/public
    file_server
}
```

I also added a DNS record similar to the one above.
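Caddy can only get a certificate for a domain once that domain resolves to the server, so it’s worth checking a new record before reloading. This sketch uses `dig` (from the dnsutils package on Ubuntu); the function name and the example IP (a documentation address) are placeholders.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): confirm a (sub)domain's A record points at
# this server. Usage: check_dns blog.ragecage64.com 203.0.113.10
check_dns() {
  local domain=$1 expected_ip=$2 answers
  answers=$(dig +short "$domain" A) || return 1
  # dig +short prints one answer per line; CNAME targets may come first.
  if grep -qxF "$expected_ip" <<<"$answers"; then
    echo "$domain -> $expected_ip"
  else
    echo "$domain does not resolve to $expected_ip (got: ${answers:-nothing})" >&2
    return 1
  fi
}
```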

Now I have the site you are currently on! To update the blog, I push to my repo, pull the server’s copy of the repo, and run hugo. It’s a bit more work than GitHub Pages, where I previously hosted this site, but every part of this setup is more work than it used to be and I’m still having fun!
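The pull-and-rebuild step fits in one function. This is a sketch with hypothetical paths (`BLOG_SRC` for the server’s clone, `BLOG_OUT` for whatever folder Caddy’s `root` points at); the Git and Hugo flags are real, but the layout is an assumption.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): update the server's clone of the blog,
# including the theme submodule, and rebuild into the folder Caddy serves.
BLOG_SRC=${BLOG_SRC:-$HOME/blog}
BLOG_OUT=${BLOG_OUT:-$BLOG_SRC/public}

update_blog() {
  git -C "$BLOG_SRC" pull --ff-only --recurse-submodules || return 1
  # Also check out any submodules (e.g. a theme) added since the last pull.
  git -C "$BLOG_SRC" submodule update --init --recursive || return 1
  hugo --source "$BLOG_SRC" --destination "$BLOG_OUT"
}
```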

Bitwarden

The last thing I got working was my own Bitwarden instance to share with my partner and family. To do this, I decided to run a Docker container of the Rust implementation of Bitwarden. I created a docker-compose file for the container (which maybe wasn’t necessary because I’m just using SQLite anyway, but it makes adding a real database later easier) and ran it in the background with docker-compose up -d. I then created a DNS record and a Caddy reverse proxy similar to Gitea’s above, and followed the instructions to connect the Bitwarden clients to my instance. When I first started the instance, I set the container environment variable SIGNUPS_ALLOWED=true. This let me and my partner sign up quickly, after which I restarted the container with the variable set to false. Now only the people I want can sign up for my instance; it’s only running on a SQLite database, so it’s not exactly web scale!
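Here is a sketch of what such a compose file could look like, written out by a small shell function. It is not my actual file: the directory, host port 8080, and `DOMAIN` value are placeholders, and it uses the current image name (the project formerly called bitwarden_rs is now published as `vaultwarden/server`). The signup flag is read from the shell environment by docker-compose, and the port is bound to localhost so the container is reachable only through Caddy, not directly past ufw.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): write a docker-compose.yml for the Rust
# Bitwarden server. VW_DIR, port 8080, and the DOMAIN value are placeholders.
VW_DIR=${VW_DIR:-$HOME/vaultwarden}

write_vaultwarden_compose() {
  mkdir -p "$VW_DIR/data" || return 1
  # The quoted 'EOF' keeps ${SIGNUPS_ALLOWED:-false} literal, so docker-compose
  # (not this shell) substitutes it from the environment at startup.
  cat > "$VW_DIR/docker-compose.yml" <<'EOF'
version: "3"
services:
  vaultwarden:
    image: vaultwarden/server:latest
    restart: unless-stopped
    environment:
      DOMAIN: "https://vault.example.com"
      SIGNUPS_ALLOWED: "${SIGNUPS_ALLOWED:-false}"
    volumes:
      - ./data:/data
    ports:
      # Localhost only: Caddy proxies to it, the internet can't reach it directly.
      - "127.0.0.1:8080:80"
EOF
}
```

With this layout, `SIGNUPS_ALLOWED=true docker-compose up -d` opens signups, and running plain `docker-compose up -d` afterwards recreates the container with signups closed again. The matching Caddy block would use `reverse_proxy localhost:8080`.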

Who knows what else!

Now I have an easy way to host any future projects on one server! It’s pretty exciting. I don’t know what’s going up next, but it’s fun to know that the next time I think of something exciting, I always have somewhere to put it!